UTDrive Corpus Classical

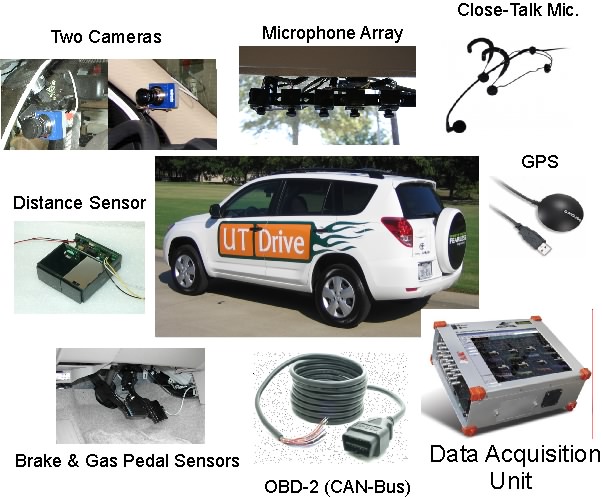

The vehicle used for data collection is a TOYOTA RAV4 customized with a variety of input channels for multi-modal data acquisition while driving. Recorded data include audio (microphone array and close-talk microphone, six channels in total), video, pedal pressures, following distance, CAN-Bus information and GPS information. Of these channels, audio and video are especially rich in information, which can be extracted through further analysis such as stress-level measurement from the speech signal and tracking of the driver's eye and head movements for gaze analysis. The data can be interpreted at multiple levels, and different combinations of signals can be fused to open new areas in interdisciplinary driver behavior research and to deepen the understanding of driver behavior, leading to new in-vehicle technologies. The corpus currently includes data from 37 female and 40 male drivers.

Check UTDrive Corpus Documentation

Download: UTDrive_Corpus_Classical

CU-Move Corpus

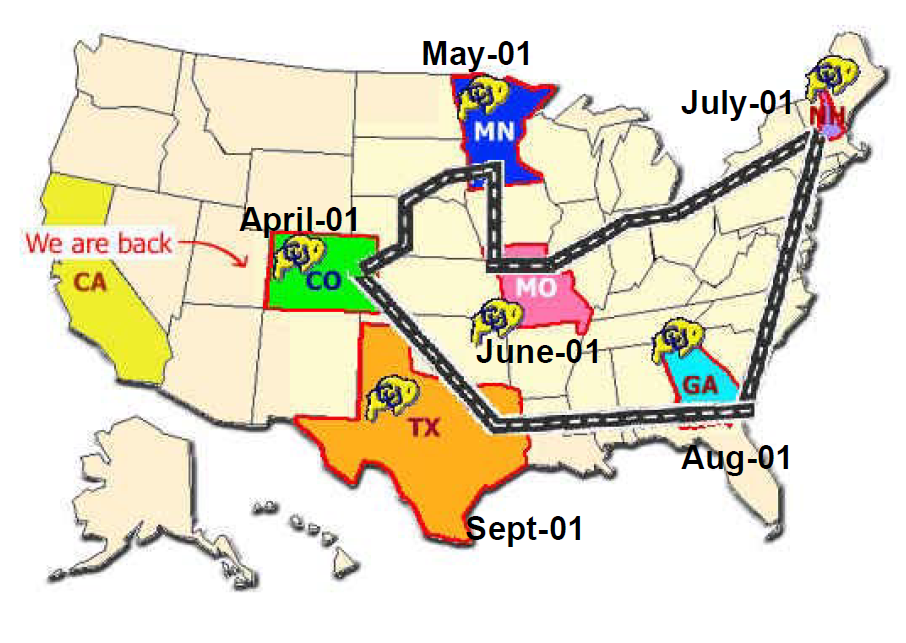

The goal of the CU-Move project is to develop algorithms and technology for robust access to information via spoken dialog systems in mobile, hands-free environments. The novel aspects include the formulation of a new microphone array and multi-channel noise suppression front-end, corpus development for speech and acoustic vehicle conditions, environmental classification for changing in-vehicle noise conditions, and a back-end dialog navigation information retrieval sub-system connected to the WWW. While previous attempts at in-vehicle speech systems have generally focused on isolated command words to set radio frequencies, temperature control, etc., the CU-Move system is focused on natural conversational interaction between the user and the in-vehicle system. Since previous studies in speech recognition have shown significant losses in performance when speakers are under task or emotional stress, it is important to develop conversational systems that minimize operator stress for the driver. System advances include intelligent microphone arrays, auditory and speaker-based constrained speech enhancement methods, environmental noise characterization, and speech recognizer model adaptation methods for changing acoustic conditions in the car. Our initial prototype system allows users to get driving directions for the Boulder area via a hands-free cell phone while driving in a car.

Check CU-Move Corpus Documentation

UTDrive Corpus Portable

In addition to the classical data collection approach with expensive equipment, the use of smart portable devices in vehicles makes it possible to record useful data and helps develop a better understanding of driving behavior. Smartphones contain a variety of useful sensors such as cameras, microphones, accelerometers, gyroscopes, and GPS, which could potentially be leveraged as a cost-effective approach for in-vehicle data collection, monitoring, and added safety options/feedback strategies. Moreover, these multi-channel signals can be synchronized with one another, providing a comprehensive description of driving scenarios. Portable mobile platforms lower the entry barrier and allow for a wider range of naturalistic data collection opportunities for vehicles and for drivers operating their own vehicles.
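The description above does not say how the smartphone channels are synchronized. As a rough illustration only, the sketch below assumes each sensor stream carries its own timestamps in seconds and aligns all streams onto one reference stream by picking the nearest sample in time. The stream names (`gps_speed_mps`, `accel_x`), the sample values, and the helper functions are hypothetical and are not part of the UTDrive tooling.

```python
from bisect import bisect_left


def nearest_sample(timestamps, values, t):
    """Return the value whose timestamp is closest to t.

    Assumes `timestamps` is sorted in ascending order.
    """
    i = bisect_left(timestamps, t)
    if i == 0:
        return values[0]
    if i == len(timestamps):
        return values[-1]
    # Pick whichever neighbour is closer in time; ties go to the earlier sample.
    before, after = timestamps[i - 1], timestamps[i]
    return values[i - 1] if t - before <= after - t else values[i]


def align_streams(streams, reference):
    """Resample every stream onto the timestamps of the reference stream."""
    ref_times = streams[reference][0]
    aligned = []
    for t in ref_times:
        row = {"t": t}
        for name, (times, values) in streams.items():
            row[name] = nearest_sample(times, values, t)
        aligned.append(row)
    return aligned


# Hypothetical recordings: (timestamps in seconds, samples).
streams = {
    "gps_speed_mps": ([0.0, 1.0, 2.0], [10.0, 11.0, 12.0]),
    "accel_x": ([0.0, 0.4, 0.9, 1.5, 2.1], [0.1, 0.2, 0.3, 0.4, 0.5]),
}
rows = align_streams(streams, reference="gps_speed_mps")
for row in rows:
    print(row)
```

Nearest-sample alignment is only one possible choice; interpolation or hardware-level clock synchronization may be more appropriate when sensors run at very different rates or their clocks drift.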